Plotting HRRR 2-meter temperatures

Overview¶

- Access archived HRRR data hosted on AWS in Zarr format

- Visualize one of the variables (2m temperature) at an analysis time

Imports¶

import xarray as xr

import s3fs

import metpy

import numpy as np

import matplotlib.pyplot as plt

import cartopy.crs as ccrs

import cartopy.feature as cfeature

What is Zarr?¶

So far we have used Xarray to work with gridded datasets in NetCDF and GRIB formats. Zarr is a relatively new data format. It is particularly relevant in the following two scenarios:

- Datasets that are stored in what is called an object store. This is a commonly used storage method offered by cloud providers, such as Amazon, Google, and Microsoft.

- Datasets that are typically too large to load into memory all at once.

The Pangeo project specifically recommends Zarr as the Xarray-amenable data format of choice in the cloud:

“Our current preference for storing multidimensional array data in the cloud is the Zarr format. Zarr is a new storage format which, thanks to its simple yet well-designed specification, makes large datasets easily accessible to distributed computing. In Zarr datasets, the arrays are divided into chunks and compressed. These individual chunks can be stored as files on a filesystem or as objects in a cloud storage bucket. The metadata are stored in lightweight .json files. Zarr works well on both local filesystems and cloud-based object stores. Existing datasets can easily be converted to zarr via xarray’s zarr functions.”
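To make the chunking idea in the quote concrete, here is a minimal sketch that counts how many chunks a single 2-D field would be split into. The grid size is the HRRR grid size listed under HRRR Grid Navigation on this page; the chunk shape is an assumption chosen for illustration and is not read from the archive.

```python
import math

# HRRR grid size (x, y), as listed in the grid navigation on this page
grid_shape = (1799, 1059)

# ASSUMPTION: an illustrative chunk shape, not taken from the archive's metadata
chunk_shape = (150, 150)

# Each axis needs enough chunks to cover every grid point, so round up
chunks_per_axis = tuple(math.ceil(n / c) for n, c in zip(grid_shape, chunk_shape))
total_chunks = math.prod(chunks_per_axis)

print(chunks_per_axis, total_chunks)
```

With these assumed numbers the field is covered by 12 x 8 = 96 chunks, each of which can be stored and fetched as a separate object.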

Access archived HRRR data hosted on AWS in Zarr format¶

For a number of years, the MesoWest group at the University of Utah has hosted an archive of data from NCEP’s High Resolution Rapid Refresh model. This data, originally in GRIB-2 format, has been converted into Zarr and is freely available “in the cloud”, on Amazon Web Services’ Simple Storage Service, otherwise known as S3. Data is stored in S3 in a manner akin to (but different from) a Linux filesystem, using a bucket and object model.
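As a small illustration of the bucket and object model, the sketch below splits an S3 URL of the kind used later on this page into its bucket name and object key, using only the Python standard library. The variable names are illustrative. For a Zarr store the key is really a prefix shared by the many objects (metadata files and chunks) that make up the dataset.

```python
from urllib.parse import urlparse

# An S3 URL of the form built later on this page
url = "s3://hrrrzarr/sfc/20211016/20211016_21z_anl.zarr/2m_above_ground/TMP"

parts = urlparse(url)
bucket = parts.netloc           # the bucket plays the role of a top-level container
key = parts.path.lstrip("/")    # the key looks like a path, but S3 has no real directories

print(bucket)
print(key)
```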

To interactively browse the contents of this archive, use the HRRRZarr File Browser on AWS.

To access Zarr-formatted data stored in an S3 bucket, we follow a 3-step process:

- Create URL(s) pointing to the bucket and object(s) that contain the data we want

- Create map(s) to the object(s) with the s3fs library’s S3Map method

- Pass the map(s) to Xarray’s open_dataset or open_mfdataset methods, and specify zarr as the format, via the engine argument.

The data we need are split across two directories in the archive, so we use Xarray’s open_mfdataset method and pass in two AWS S3 file references to these two corresponding directories.

Create the URLs

date = '20211016'  # YYYYMMDD, matching the URLs printed below

hour = '21'

var = 'TMP'

level = '2m_above_ground'

url1 = 's3://hrrrzarr/sfc/' + date + '/' + date + '_' + hour + 'z_anl.zarr/' + level + '/' + var + '/' + level

url2 = 's3://hrrrzarr/sfc/' + date + '/' + date + '_' + hour + 'z_anl.zarr/' + level + '/' + var

print(url1)

print(url2)

s3://hrrrzarr/sfc/20211016/20211016_21z_anl.zarr/2m_above_ground/TMP/2m_above_ground

s3://hrrrzarr/sfc/20211016/20211016_21z_anl.zarr/2m_above_ground/TMP
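The string concatenation above can also be wrapped in a small helper, which makes it easier to catch a malformed date or hour before any request is sent. This is only a sketch: hrrr_zarr_urls is a hypothetical name, and the URL pattern is simply the one used in the cell above.

```python
from datetime import datetime

def hrrr_zarr_urls(date, hour, level, var):
    """Return the two analysis URLs for one variable (hypothetical helper).

    date is 'YYYYMMDD' and hour is 'HH', both as strings.
    """
    # Reject strings of the wrong length, then let strptime check the values
    if len(date) != 8 or len(hour) != 2:
        raise ValueError("expected date as YYYYMMDD and hour as HH")
    datetime.strptime(date + hour, "%Y%m%d%H")

    base = f"s3://hrrrzarr/sfc/{date}/{date}_{hour}z_anl.zarr/{level}/{var}"
    return f"{base}/{level}", base

url1, url2 = hrrr_zarr_urls("20211016", "21", "2m_above_ground", "TMP")
print(url1)
print(url2)
```

Called with the values used on this page, the helper reproduces the two URLs printed above; a ten-digit date string raises a ValueError instead of silently producing a URL that does not exist.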

Create the S3 maps from the S3 object store.

fs = s3fs.S3FileSystem(anon=True)

file1 = s3fs.S3Map(url1, s3=fs)

file2 = s3fs.S3Map(url2, s3=fs)

Use Xarray’s open_mfdataset to create a Dataset from these two S3 objects.

ds = xr.open_mfdataset([file1, file2], engine='zarr')

Examine the dataset.

ds

HRRR Grid Navigation:¶

PROJECTION: LCC

ANGLES: 38.5 -97.5 38.5

GRID SIZE: 1799 1059

LL CORNER: 21.1381 -122.7195

UR CORNER: 47.8422 -60.9168

lon1 = -97.5

lat1 = 38.5

slat = 38.5

projData = ccrs.LambertConformal(central_longitude=lon1,

                                 central_latitude=lat1,

                                 standard_parallels=[slat, slat],

                                 globe=ccrs.Globe(semimajor_axis=6371229,

                                                  semiminor_axis=6371229))

Note: the semi-major and semi-minor axes above are equal, i.e. a spherical Earth of radius 6371.229 km, so we explicitly define a Globe in Cartopy with these values.

Examine the dataset’s coordinate variables. Each x- and y-value represents distance in meters from the central latitude and longitude.

ds.coords

Coordinates:

* projection_x_coordinate (projection_x_coordinate) float64 -2.698e+06 ......

* projection_y_coordinate (projection_y_coordinate) float64 -1.587e+06 ......

Create an object pointing to the dataset’s data variable.

airTemp = ds.TMP

When we examine the object, we see that it is a DataArray whose values are held in a Dask array rather than loaded into memory.

airTemp

Sidetrip: Dask¶

Dask is a library that is particularly well-suited for very large datasets. When we use open_mfdataset, the resulting objects are Dask objects.

MetPy supports Dask arrays, and so performing a unit conversion is straightforward.

airTemp = airTemp.metpy.convert_units('degC')

Verify that the object now carries the new units.

airTemp

Similar to what we did for datasets whose projection-related coordinates were latitude and longitude, we define objects pointing to x and y now, so we can pass them to the plotting functions.

x = airTemp.projection_x_coordinate

y = airTemp.projection_y_coordinate

Visualize 2m temperature at an analysis time¶

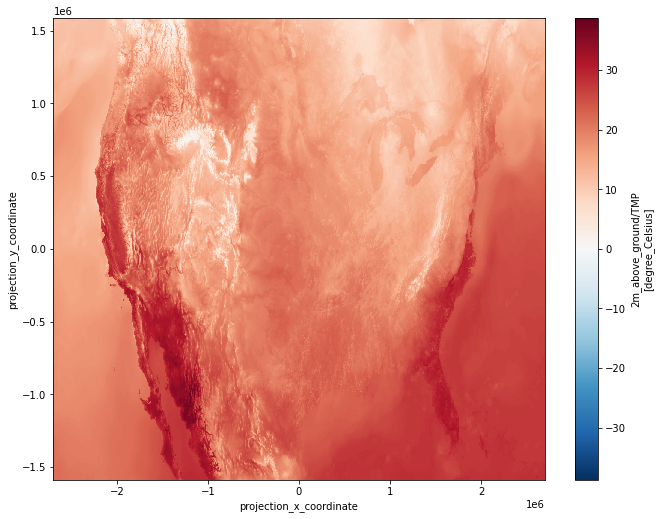

First, just use Xarray’s plot function to get a quick look to verify that things look right.

airTemp.plot(figsize=(11,8.5))

To help choose the bounds of the contour intervals, obtain the min and max values from this DataArray.

Operations on a Dask-backed DataArray in Xarray are lazy. If we want to perform a computation on this array, e.g. calculate the mean, min, or max, note that we don't get a result straightaway ... we get another Dask array.

airTemp.min()

Call the compute function to actually trigger the computation.

minTemp = airTemp.min().compute()

maxTemp = airTemp.max().compute()

minTemp.values, maxTemp.values

(array(-4.75, dtype=float16), array(38.75, dtype=float16))

Based on the min and max, define a range of values used for contouring. Let’s invoke NumPy’s floor and ceil(ing) functions so that the bounds are whole numbers, whatever variable we are contouring.

fint = np.arange(np.floor(minTemp.values), np.ceil(maxTemp.values) + 2, 2)

fint

array([-5., -3., -1., 1., 3., 5., 7., 9., 11., 13., 15., 17., 19.,

21., 23., 25., 27., 29., 31., 33., 35., 37., 39.])

Plot the map¶

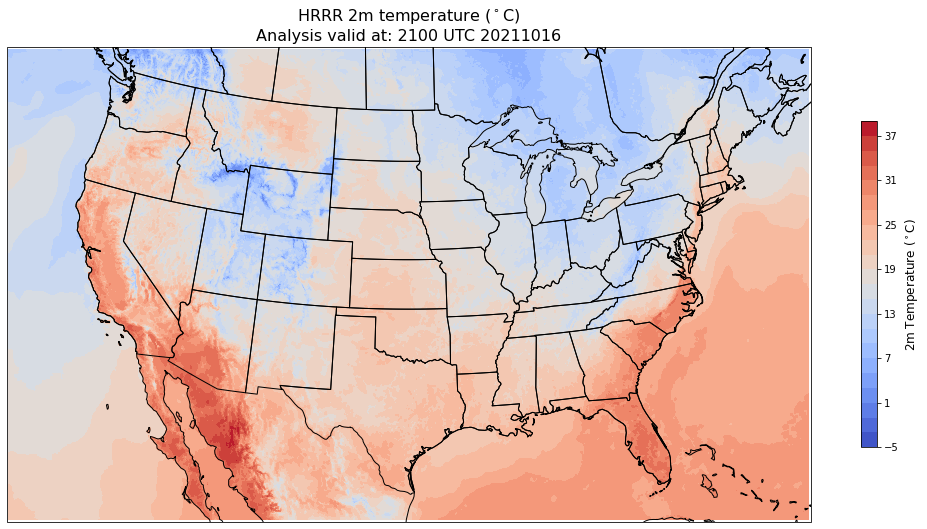

We’ll define the plot extent to nicely encompass the HRRR’s spatial domain.

latN = 50.4

latS = 24.25

lonW = -123.8

lonE = -71.2

res = '50m'

fig = plt.figure(figsize=(18,12))

ax = plt.subplot(1,1,1,projection=projData)

ax.set_extent([lonW,lonE,latS,latN],crs=ccrs.PlateCarree())

ax.add_feature(cfeature.COASTLINE.with_scale(res))

ax.add_feature(cfeature.STATES.with_scale(res))

# Add the title

tl1 = r'HRRR 2m temperature ($^\circ$C)'

tl2 = 'Analysis valid at: ' + hour + '00 UTC ' + date

plt.title(tl1+'\n'+tl2,fontsize=16)

# Contour fill

CF = ax.contourf(x,y,airTemp,levels=fint,cmap=plt.get_cmap('coolwarm'))

# Make a colorbar for the ContourSet returned by the contourf call.

cbar = fig.colorbar(CF,shrink=0.5)

cbar.set_label(r'2m Temperature ($^\circ$C)', size='large')
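As a rough cross-check on the projection parameters used for this map, the sketch below applies the textbook spherical Lambert conformal conic formulas (tangent case, one standard parallel) to the lower-left corner listed under HRRR Grid Navigation. It does not use Cartopy, and lcc_forward is a hypothetical helper written for this illustration. If the parameters are right, the result should land close to the first x and y coordinate values shown by ds.coords (about -2.698e+06 and -1.587e+06 meters).

```python
import math

R = 6371229.0   # sphere radius in meters, the value passed to ccrs.Globe on this page
LAT0 = 38.5     # central latitude, also the single standard parallel
LON0 = -97.5    # central longitude

def lcc_forward(lat_deg, lon_deg):
    """Spherical Lambert conformal conic forward projection (hypothetical helper)."""
    phi = math.radians(lat_deg)
    phi0 = math.radians(LAT0)
    n = math.sin(phi0)  # cone constant for a single standard parallel

    def t(p):
        return math.tan(math.pi / 4 + p / 2)

    rho0 = R * math.cos(phi0) / n           # radius of the parallel through the origin
    rho = rho0 * (t(phi0) / t(phi)) ** n    # radius of the parallel through the point
    theta = n * math.radians(lon_deg - LON0)

    x = rho * math.sin(theta)
    y = rho0 - rho * math.cos(theta)
    return x, y

# Lower-left corner from the grid navigation block
x_ll, y_ll = lcc_forward(21.1381, -122.7195)
print(round(x_ll), round(y_ll))
```

The projection origin itself maps to (0, 0), which is why the x and y coordinates in the dataset can be read as distances in meters from the central latitude and longitude.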

Summary¶

- Xarray can access datasets in Zarr format, which is ideal for a cloud-based object store system such as S3.

- Xarray and MetPy both support Dask, a library that is particularly well-suited for very large datasets.

What’s next?¶

On your own, browse the hrrrzarr S3 bucket. Try making maps for different variables and/or different times.
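When trying different times, it can help to generate the date and hour strings rather than type them by hand. The following is a minimal sketch using only the standard library; analysis_times is a hypothetical helper, and it does not check whether a given time is actually present in the archive.

```python
from datetime import datetime, timedelta

def analysis_times(start, count, step_hours=1):
    """Yield (date, hour) string pairs such as ('20211016', '21') (hypothetical helper)."""
    for i in range(count):
        t = start + timedelta(hours=i * step_hours)
        yield t.strftime("%Y%m%d"), t.strftime("%H")

# Four consecutive hourly times, starting at the analysis time used on this page
times = list(analysis_times(datetime(2021, 10, 16, 21), count=4))
for date, hour in times:
    print(date, hour)
```

Each pair can be substituted for the date and hour variables used when creating the URLs; note that the date rolls over correctly when the hour passes 23.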